Brains of Sand 2 Thoughts as Programs

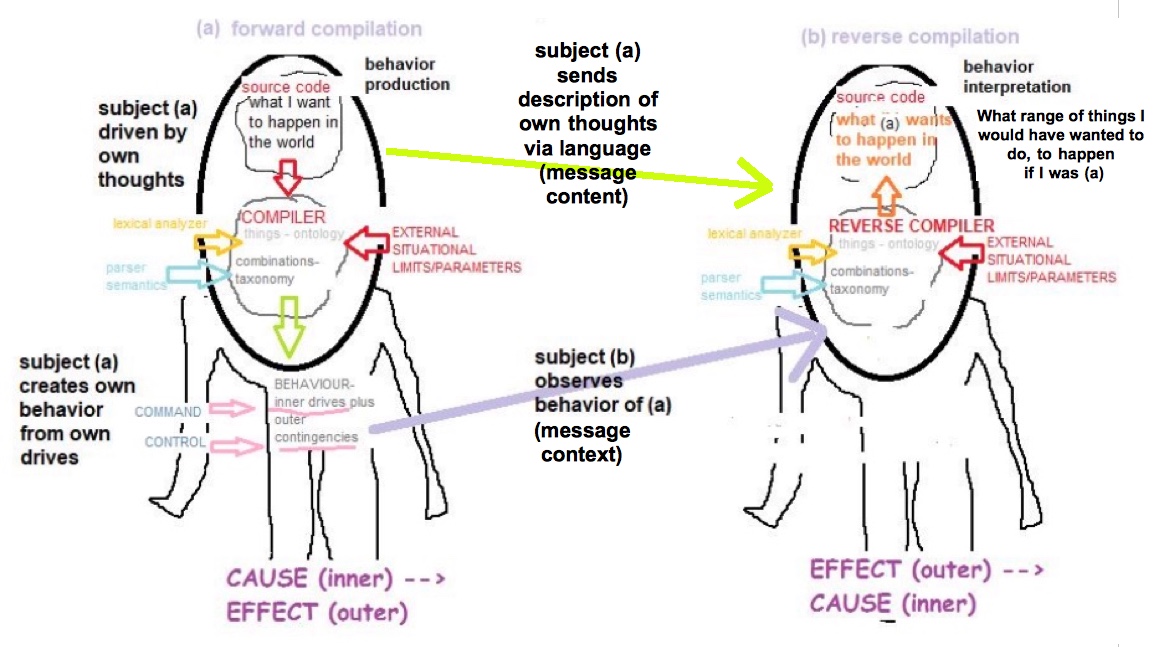

Figure 2.1(a)

Figure 2.1(b)

This discussion extends the ideas about linguistic cognition and cognitive language developed in the previous section. It begins by using Occam's Razor* together with Peirce's Hook** (a heuristic combination so frequently useful that it warrants its own hackronym, 'ORPH3US') to suggest a minimal model, namely that our thoughts bear a superficial resemblance to computer programs. We extend this simple model to suggest that language is externalised thought, and there is an increasing amount of evidence that supports this view. In this simplified model, we use our thoughts to generate our own behaviour, and we use our speech/text to communicate our subjective/narrative experience to others, thereby enabling the same goal-seeking behaviour in them.

The first of many problems with this simple model is that while some language statements are (conveniently) concrete and imperative ("Choose another shirt!"), the majority contain abstract, even metaphorical, descriptions ("That colour clashes with your aura."). We can break this objection into two parts. First, we suggest that abstraction in language influences behaviour indirectly, via beliefs and emotionality. We therefore need to deal with emotions and beliefs later.

^^^^ Figure 2.1 can be understood as conditionally supporting the Ideo-Motor Principle (IMP), which includes the following ideas-

(a) Ideomotor theory suggests that actions are represented by their perceivable effects. TDE theory also makes this claim, but derives it not from ideomotor principles but from a more fundamental source, namely William James' original common coding arguments, ie that effects are the common code used to represent BOTH causes and effects.

(b) Ideomotor theory also implies that ongoing action affects (skews) perception of concurrent events. TDE theory does not unequivocally support all instances of (b), as the following two contrasting scenarios show.

Situation A- Imagine you are seated on a swivelling chair. An assistant spins you and the chair around. Clearly the action (spinning) affects perception of concurrent events: the total movement of the assistant, as you perceive it, consists of the rotation of the chair plus any movements the assistant makes independently of that rotation. However, you are not fooled, because your mind easily separates your own spinning from any collateral motion made by other actors, eg by the assistant while she waits for the chair to slow to a stop.

Situation B- You are a passenger in a car which has stopped at a red light. You are slumped low in your seat and cannot see the ground. The car next to yours creeps ahead, anticipating the signal's transition from red to green. You are startled, because it suddenly feels as if the car in which you are seated has moved backward significantly.
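The contrast between the two situations can be illustrated with a small numerical sketch. It assumes, purely for illustration, that what is seen is the other object's motion relative to the observer, and that the observer corrects this with an estimate of their own motion. The function name and all velocity values are hypothetical and are not part of TDE theory.

```python
def inferred_other_velocity(seen_relative_velocity, self_velocity_estimate):
    """Recover another object's velocity from what is seen plus a self-motion estimate."""
    return seen_relative_velocity + self_velocity_estimate


# Situation A: the chair spins at 90 deg/s while the assistant stands still.
chair_velocity = 90.0
assistant_velocity = 0.0
seen_a = assistant_velocity - chair_velocity  # the assistant appears to sweep past
# The observer has a good estimate of their own spin, so the correction succeeds.
situation_a_assistant = inferred_other_velocity(seen_a, chair_velocity)

# Situation B: own car is stationary while the neighbouring car creeps at 0.5 m/s.
own_car_velocity = 0.0
neighbour_velocity = 0.5
seen_b = neighbour_velocity - own_car_velocity
# With the ground out of view, the passenger wrongly assumes the neighbour is at rest
# and attributes the relative motion to their own car instead.
assumed_neighbour_velocity = 0.0
implied_self_velocity = assumed_neighbour_velocity - seen_b

print(situation_a_assistant)   # assistant correctly judged to be standing still
print(implied_self_velocity)   # negative value: own car feels as if it rolls backward
```

In this sketch the only difference between the two cases is whether a reliable self-motion estimate is available, which is one possible reading of why (b) holds in Situation B but not in Situation A.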

*start simple, only introduce complexity where it is absolutely necessary to explain observations.

^ mathspeak for 'If and only if'

**also called retroduction or abductive logic, invented by Charles Sanders Peirce. 'Retroduction' is the preferred term here, since 'adduction' and 'abduction' are also used by physiotherapists to indicate limb movement toward and away from the body's centerline, respectively. For convenience, the combination of Occam's and Peirce's principles is called ORPH3US.

***Stanford Encyclopaedia of Philosophy

****The adoption of Subjective Stance (SS) requires no conscious thought or effort, indeed, it forms part of the implicit set of structural rules (axioms) which guide both individual behaviour and evolutionary development.

To augment this model in a way which includes animals and humans as both being part of the same evolutionary hierarchy, consider Figure 2.1^^^^. Both animals and humans can observe their own kind and infer mental state from observed behaviour by adopting the subjective stance, ie assuming that the fellow creature they observe has the same or similar background experience and metabolic needs (and therefore learned responses and behavioural goals). By imagining/remembering themselves in that situation, and speculating/recalling what they would do/did, both humans and animals are able to do a good job of inferring the mental state of other selves, iff^ they adopt the subjective stance****.

That animals do this is an uncontroversial claim- we observe them doing it often. But humans can also cooperate successfully in busy, complex situations without language. Driving in traffic is one of the most common examples: people who don't know each other (and therefore can't assume situational behaviour codes from interpersonal history) nevertheless manage to cooperate safely most of the time.

Observation is a form of behavioural communication which depends on empathy. It could not work without the subjective stance, in which each subject models other subjects as internally identical to themselves. Note that, in this model of language and thought, there are two information streams that must be taken into consideration, the manifest (overt) language stream, and the latent (covert) image stream - see figure 2.1(b) above.

Therefore we can extend the model's assumptions as follows-

(i) that knowledge is internalised semantics - specifically, autobiographical knowledge (episodic memory) is internalised serial semantics, and semantic memory (general knowledge) is internalised parallel semantics^^.

(ii) spoken language is externalised serial syntax, interpreted by utterance when spoken, compiled by paragraph when written.

(iii) body language/behaviour (embodiment and embedded situational codes) is externalised parallel syntax.

(iv) thought is internalised serial semantics, and is therefore equivalent in every way to autobiographical/narrative knowledge

(v) thought is 'compiled' into an intermediate visuospatial form (mental imagery^^^) equivalent to internalised parallel syntax which is executable by a cybernetic machine (cybermaton) in the same sense that compiled object code is executable by a Turing equivalent Finite State Machine (FSM).

NOTE that mental execution in a Cybermaton occurs declaratively, via drive-state differential reduction, NOT procedurally (by fetching and executing instructions and data). Another name for the Cybermaton is a GOLEM, the goal-oriented differential process model depicted in Figure 2.2.
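The distinction between procedural execution and drive-state differential reduction can be sketched in a few lines. This is a minimal illustration, not the GOLEM model itself: it assumes a one-dimensional state, treats the 'drive' as the difference between goal and current state, and uses a hypothetical `gain` parameter to shrink that difference on each step.

```python
def run_procedural(state, program):
    """Procedural execution: fetch each instruction in order and apply it."""
    for instruction in program:
        state = instruction(state)
    return state


def reduce_drive(state, goal, gain=0.5, tolerance=1e-3, max_steps=100):
    """Declarative execution: only the goal is given; the difference drives the change."""
    history = [state]
    for _ in range(max_steps):
        drive = goal - state           # the drive-state differential
        if abs(drive) < tolerance:     # goal reached closely enough: nothing left to do
            break
        state += gain * drive          # act so as to reduce the differential
        history.append(state)
    return history


# Procedural: the steps are spelled out in advance.
program = [lambda s: s + 4.0, lambda s: s + 6.0]
print(run_procedural(0.0, program))

# Declarative: only the target is stated; the path emerges from the reduction.
trajectory = reduce_drive(state=0.0, goal=10.0)
print(trajectory[-1])
```

Both calls end near the same state, but only the first needed a list of instructions; the second needed a goal and a rule for reducing the remaining difference.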

^^ Endel Tulving's pioneering research on memory architecture divides memory along two axes. The horizontal axis divides declarative from procedural memory, while the vertical axis sub-divides declarative knowledge into the episodic subtype, in the left cerebral hemisphere, and the semantic subtype, in the right cerebral hemisphere.

^^^There is much evidence that the brain supports eidetic (ie realistic, concrete, representational) mental imagery, eg see Shepard & Metzler.

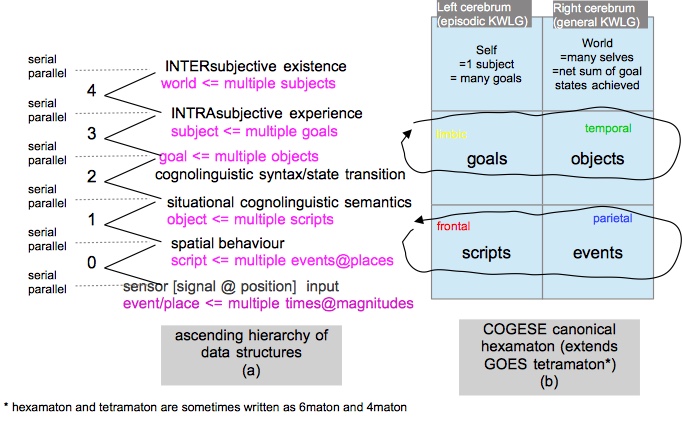

Figure 2.2

The data structure (DS) of the input channel is a representation; think of a photo, diagram or graphic - an array of stimuli at known (fixed) locations on the body, or evaluated from these places. Think SEMANTICS - why? Because in the input channel, the networks/hierarchies of neuronal connections are bottom-up, and therefore CONVERGENT. As the signal ascends the tree, each child node links to its sole, unambiguous parent node. This is the technical (computer science 101) reason that semantics conveys meaning unambiguously, and why Montague grammar, not Chomsky grammar, rules the input side.

- The DS of the output channel is a reproduction; think of a piece of code computing a time series of 'positions' for a given set of sensor 'locations', a 'microbehaviour'. Think Chomsky and his recursive grammar generators, think SYNTAX - why? Because in the output channel, the networks/hierarchies of neuronal connections are top-down, and therefore DIVERGENT. As the signal descends the tree, each parent node links to any one of its child nodes. This is the reason that syntax conveys choice, and why Chomsky grammar, not Montague grammar, rules the output side.

- Careful rereading of the two paragraphs above should reveal a further important pair of observations-

- (i) that the input channel spans the organism's spatial extent, and can therefore be modelled with minimal error as a spatial function describing the incremental slice of time dt.

- (ii) that the output channel, being the functional 'dual' or 'complement' of the input channel, must span the organism's temporal extent- ergo, it can be modelled with minimal error as a temporal function which describes the incremental slice of space ds.
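The convergent/divergent asymmetry described above for the input and output channels can be made concrete with a toy tree. This is a sketch only: the node labels and the `PARENT` table are hypothetical and stand in for whatever the neuronal hierarchy actually encodes.

```python
# Each child has exactly one parent, so the table is a plain child -> parent map.
PARENT = {
    "edge": "shape",
    "corner": "shape",
    "shape": "object",
    "colour": "object",
}


def ascend(node):
    """Bottom-up (convergent): every step has exactly one possible continuation."""
    path = [node]
    while node in PARENT:
        node = PARENT[node]
        path.append(node)
    return path


def children(node):
    """All nodes whose parent is `node`, in a stable order."""
    return sorted(child for child, parent in PARENT.items() if parent == node)


def descend(node):
    """Top-down (divergent): every step may branch, so there are many possible paths."""
    kids = children(node)
    if not kids:
        return [[node]]
    return [[node] + rest for kid in kids for rest in descend(kid)]


print(ascend("edge"))      # a single, unambiguous path to the root
print(descend("object"))   # several alternative paths, one per leaf
```

Ascending from any leaf yields one path, which matches the claim that the input side is unambiguous. Descending from the root yields one path per leaf, so a choice has to be made at each branch, which matches the claim that the output side conveys choice.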

At the top level of the GOLEM system, the subject's goals are input as (voluntary) commands. Since these goals involve habitual or preferred state transitions, they take the form of syntax, where this term is interpreted broadly (as per Chomsky's I-language/Fodor's LOT). If the organism is a human and can use language, this syntax term is interpreted serially, as a string. Otherwise, for an animal or a non-linguistic human, it is interpreted as a set of parallel threads for internal use by the organism. In other words, the subject uses this kind of parallel syntax to convert its own thoughts into its own behaviours. Typically, each of the organism's limbs will be allocated a goal, ie a position at which the self-object is to be located at a given space and time slot. The result is a spatiotemporal trajectory which causes each sub-goal to be completed.
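The idea of allocating one goal per limb and obtaining a spatiotemporal trajectory can be sketched as follows. The limb names, the one-dimensional positions and the use of linear interpolation are all assumptions made for illustration; the text does not specify how the trajectory between start and goal is shaped.

```python
def limb_trajectory(start, goal, steps):
    """Positions for one limb from start to goal, one per time slot (inclusive)."""
    return [start + (goal - start) * t / steps for t in range(steps + 1)]


def parallel_plan(starts, goals, steps=4):
    """One independent 'thread' per limb, all sharing the same time slots."""
    return {limb: limb_trajectory(starts[limb], goals[limb], steps) for limb in goals}


starts = {"left_hand": 0.0, "right_hand": 0.0}
goals = {"left_hand": 2.0, "right_hand": -4.0}

plan = parallel_plan(starts, goals)

# Reading the plan by time slot gives the whole-body posture at each instant.
time_slices = list(zip(*plan.values()))
for t, posture in enumerate(time_slices):
    print(t, posture)
```

Each dictionary entry corresponds to one parallel thread, and each row of `time_slices` is the combined posture at one time slot, so the same plan can be read either per limb or per instant.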

Speech involves a stream of symbols, to be interpreted by the recipient's subjective process. Thought, however, involves a graphical representation of the organism's own behaviour region. BUT at the next level down, thought, which is parallel goal-space syntax, is equivalent to serial object-space semantics, or mental imagery (imagination, mental emulation)^^.

^^see LCCM theory- figure 2.4. This diagram is included to demonstrate the uselessness of depictions which lack anatomic reference. Where are these LCCM categories located? If they can't be located physically, can they be isolated virtually, and if not, why not? TDE/GOLEM theory does not confuse the reader in this way. If a given cognitive category exists in TDE/GOLEM theory, the reader will be able to locate it in the brain with ease.

*This mechanism, co-discovered (independently) by both researchers, is the only viable mechanism which avoids the requisite invocation of the erroneous concept of efference copy.

Figure 2.3

COGESE neuroanatomical subsystem ('wetware') is a multi-level data hierarchy/executive protocol

In figure 2.3 (a), the unifying latticework underlying the COGESE hexagesic architecture is shown to be an extension of the GOLEM's rising chain of paired, convergent parallel-to-serial processing paradigms. The processing type is given in the left column of 2.3(a), while the processor (platform) type is given in the right column. Note that the levels in the two columns do not align- this is expected when the dataflow direction is ascending, since each software level (left col.) inputs data from the nearest lower 'hardware' platform and outputs data to the nearest upper 'hardware' platform.

Consider the lowest level- it describes a relationship between three linguistic entities: the symbolic (events), the syntactic (scripts) and the semantic (objects). In plain English, scripts specify the manner in which events form (are interpreted as) objects. As we move up the order, this pattern is repeated. At the next level up, objects define how scripts are combined into goals. Up another level, goals describe how objects are combined into a subject's behaviours, and so on.
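The repeating pattern in this paragraph can be read as a sliding window of three terms over a single chain, where the middle term governs how the first is combined into the third. The sketch below encodes only the five terms named in the text; the `CHAIN` list and the `levels` helper are illustrative names, and the continuation beyond 'behaviours' is left open, as it is in the text.

```python
CHAIN = ["events", "scripts", "objects", "goals", "behaviours"]


def levels(chain):
    """Return (inputs, rule, outputs) triples: the rule says how inputs form outputs."""
    return [(chain[i], chain[i + 1], chain[i + 2]) for i in range(len(chain) - 2)]


for inputs, rule, outputs in levels(CHAIN):
    print(f"{rule} specify how {inputs} are combined into {outputs}")
```

Each entity therefore plays three roles in turn as the window moves up: first as an output, then as a rule, and finally as an input to the level above.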

In Figure 2.3 (b) above, the basic GOLEM comp/unit architecture, known by the acronyms GOES (or GETS), is formed from the familiar four submodules. This basic four-part GOLEM/GOES/GETS scheme is sufficient to model animal thought, but to model human-level cognition it must be enhanced by the addition of two higher-level abstract functions, labelled 'Effects' (objective behavioural observations) and 'Causation' (subjective experiential experimentation). The longer (six-part rather than four-part) acronym is tentatively given as COGESE (inspired by Fodor's MENTALESE). The 'causes' column indicates which syntax is most attractive to the organism, ie which goal-pathways and triggers are most compelling. Later, this column is developed into the Left Cerebrum's Language pathway. The 'effects' column indicates which semantics, ie which methods or behaviours, are most common, and therefore most likely to be encountered. Later, this column is developed into the Right Cerebrum's Feeling/Consciousness pathway.

The 'Effects' column vertically integrates input-channel memory, from low-level classical conditioning (CC, remember how ringing the bell caused the dog to salivate because it associated the sound with the expectation of a meal) to high-level conscious awareness. An important part of being conscious of an entity is remembering its name, and general knowledge concerning its customary situations and expected occasions, all of which are logico-linguistic functions. Reasoning and language skills are intimately interrelated types of explicit memory- low-level voluntary conative mnemonics.

The 'Causes' column vertically integrates output-channel memory, from low-level operant learning (OL, remember how the pigeon eventually learned to peck at the feed-slot lever to obtain its reward of a satisfying portion of birdseed) to the multi-level preference assessor we call emotion.